One of eBay’s strengths is its Feedback Forum, where users can leave open and honest feedback for each other after a transaction is completed. Feedback is public, so users can decide whether to buy from or sell to someone based on what the community has said about that person before. Each entry records a rating of “positive,” “neutral,” or “negative,” along with a free-text comment in which the user can explain the specifics behind the rating.

eBay assumes that a new user is inherently neutral: he is neither good nor evil. His lifetime Feedback Score starts at zero (0). Each positive rating he receives raises the score by one, a neutral rating leaves it unchanged, and a negative rating lowers it by one. Alongside the score, eBay calculates a Positive Feedback percentage, much like a test grade in school.
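To make the arithmetic concrete, here is a minimal Python sketch of the scoring rule described above. The function name `feedback_summary` is hypothetical, and the sketch assumes the percentage is positives divided by positives plus negatives, with neutral ratings excluded. It is a simplified model: eBay's real calculation applies further rules (such as time windows and limits on repeat ratings from the same user) that are not modeled here.

```python
from collections import Counter

# Score change per rating, as described above: +1, 0, -1.
DELTA = {"positive": 1, "neutral": 0, "negative": -1}


def feedback_summary(ratings):
    """Return (feedback_score, positive_percentage) for a list of ratings.

    Simplified, hypothetical model: every rating counts once, and the
    percentage is ASSUMED to be positives / (positives + negatives),
    with neutral ratings excluded.
    """
    counts = Counter(ratings)
    unknown = set(counts) - set(DELTA)
    if unknown:
        raise ValueError(f"unknown rating(s): {sorted(unknown)}")
    score = sum(DELTA[rating] * n for rating, n in counts.items())
    rated = counts["positive"] + counts["negative"]
    # A user with no positive or negative ratings has no meaningful percentage.
    percentage = 100.0 * counts["positive"] / rated if rated else None
    return score, percentage


# Nine positives, one neutral, one negative.
print(feedback_summary(["positive"] * 9 + ["neutral", "negative"]))  # → (8, 90.0)
```

Under this assumption the neutral rating moves neither number, while the single negative costs one point of score and ten points of percentage.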

The beauty of the eBay feedback model is that it conveys both whether a user can be trusted and how broadly the community agrees on that trust. All else being equal, given a choice between buying from a user with a Feedback Score of 2 and one with a score of 157, most people will choose the latter. The only problem with the Feedback Score display is the star graphic next to it, which is tied to the score in a way that is never made clear. I have been using eBay for almost a decade, and its variations still mean nothing to me. The star only adds noise.

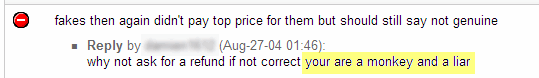

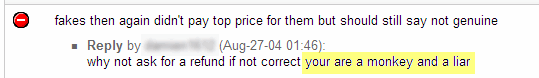

The more serious flaw is the open-ended text feedback. Users have to skim through the comments by hand to get a feel for why someone received a particular score, and many comments add little or no value to the ratings they accompany. When every seller and buyer on eBay has entries like “A+++++++++++++!!!!”, the comments stop distinguishing good traders from poor ones, and the playing field is leveled inappropriately. Useful text feedback typically falls into one of four categories: customer service, promptness of delivery, quality of the item sold, and whether the buyer would buy from the seller again.

If the responses were closed-ended instead, the feedback system could give a clearer picture of why a user has the Feedback Score he has, by tallying ratings in each category. For example, this particular user had a history of sending imitation products. Most buyers still left positive feedback because everything else was stellar, including how returns were handled. If the item-quality category showed a low score, buyers who want only genuine products would steer clear of this seller. Feedback would be aggregated by category, and far more useful for it.
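A closed-ended scheme like this is straightforward to aggregate. The Python sketch below assumes each review picks “positive,” “neutral,” or “negative” for each of the four categories named earlier; the category keys, the functions `category_totals` and `positive_share`, and the sample reviews are all hypothetical illustrations, not anything eBay actually offers. The per-category percentage reuses the assumption that neutral choices are excluded.

```python
from collections import Counter

# Hypothetical category keys, based on the four kinds of useful comments above.
CATEGORIES = (
    "customer_service",
    "delivery_promptness",
    "item_quality",
    "would_buy_again",
)
CHOICES = ("positive", "neutral", "negative")


def category_totals(reviews):
    """Tally closed-ended choices per category across many reviews.

    Each review is assumed to be a dict mapping every category to one of CHOICES.
    """
    totals = {category: Counter() for category in CATEGORIES}
    for review in reviews:
        for category in CATEGORIES:
            choice = review[category]
            if choice not in CHOICES:
                raise ValueError(f"{category}: unexpected choice {choice!r}")
            totals[category][choice] += 1
    return totals


def positive_share(counter):
    """Percentage of positives among positives + negatives (neutrals ignored)."""
    rated = counter["positive"] + counter["negative"]
    return 100.0 * counter["positive"] / rated if rated else None


# Made-up reviews for a seller who is fast and friendly but ships imitations.
reviews = [
    {"customer_service": "positive", "delivery_promptness": "positive",
     "item_quality": "negative", "would_buy_again": "positive"},
    {"customer_service": "positive", "delivery_promptness": "positive",
     "item_quality": "negative", "would_buy_again": "neutral"},
    {"customer_service": "positive", "delivery_promptness": "neutral",
     "item_quality": "positive", "would_buy_again": "positive"},
]

for category, counts in category_totals(reviews).items():
    print(category, dict(counts), positive_share(counts))
```

Reporting each category separately is what surfaces the problem: in this made-up data, service and delivery stay at 100 percent positive while item quality drops to about 33 percent, which a single overall score would hide.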

Another benefit of closed-ended feedback is that it prevents flame wars, in which users trade attacks on each other's character. Flame wars are blindly subjective and driven by emotion rather than reason. They divide communities and make them unappealing to outsiders. Closed-ended feedback options avoid flame wars by keeping feedback objective.

Good metrics are devoid of emotion, and good metrics result in better decisions.